Integrate your Certainly bot with OpenAI

Interested in testing how Generative AI (GAI) or Large Language Models (LLMs) might improve your chatbot's functionality or your bot-building workflows? Now you can! We've designed a solution that allows you to experiment with GPT-3.5 inside the Certainly Platform.

Below, we'll explain how to integrate with OpenAI to access GPT-3.5 from your bot, and we'll share customization information for more advanced users.

Chatbot configuration

Follow the steps below to connect your Certainly bot with OpenAI using our Webhook Template and recommended Module setup. The configuration described here will allow your bot to handle user messages that would otherwise go to a generic Fallback.

- Generate a new API Key from your OpenAI admin dashboard. You can read more about it in OpenAI's documentation.

- In Certainly's Webhook Marketplace, create a Webhook from the OpenAI Webhook Template. Configure the Webhook to use the API Key generated in the previous step.

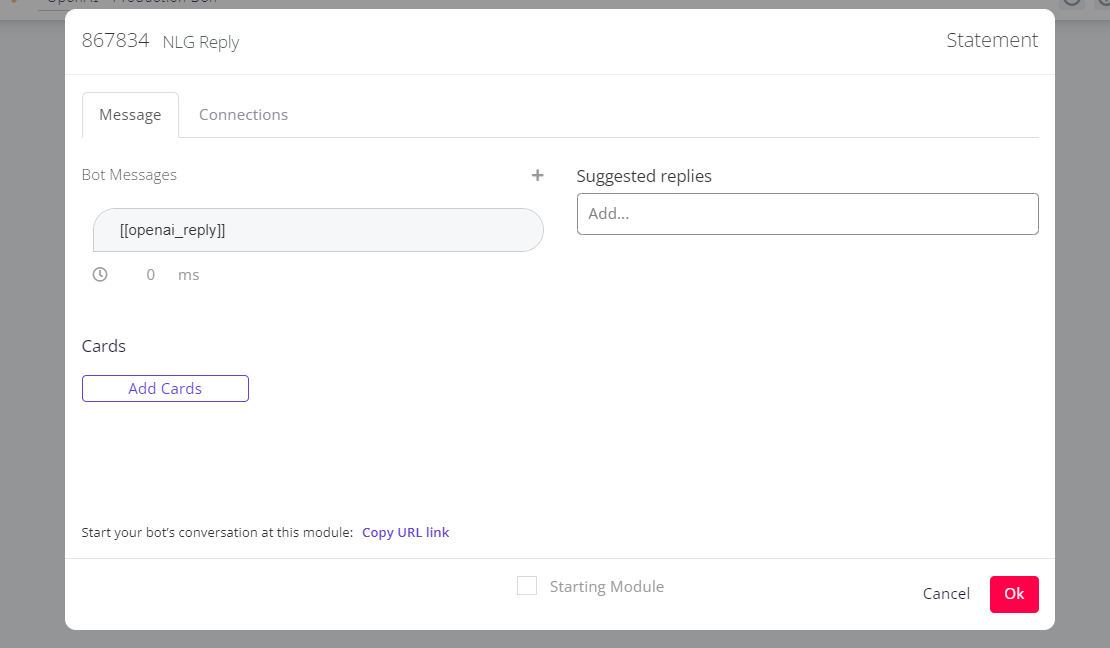

- Within the chatbot you want to connect to OpenAI, create a new landing Module to display the answer from OpenAI.

This simple Module has only one message bubble, containing "[[openai_reply]]". During a conversation, this placeholder is replaced dynamically with the value of the Custom Variable "openai_reply", which holds the answer from OpenAI.

See below for an example in which this landing Module is titled "NLG Reply":

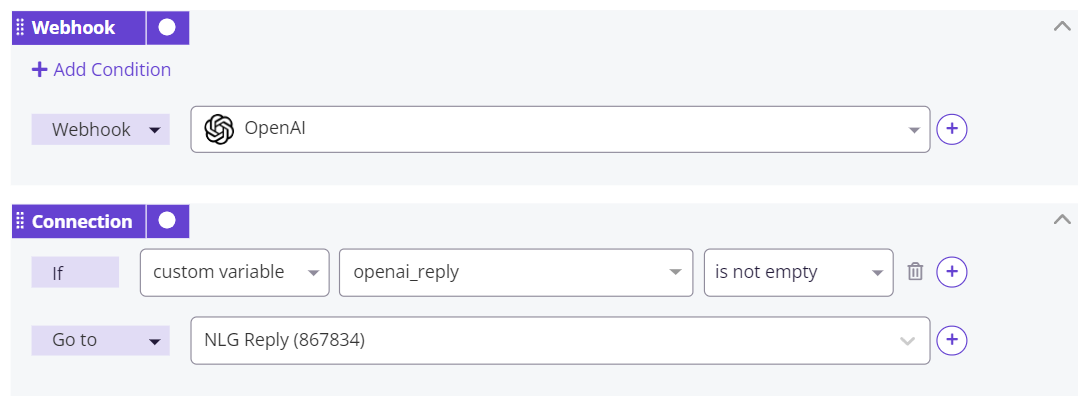

- From the right sidebar menu, open Global Connections and create the two connections described below. Ensure they are placed at the bottom of the list.

  - The first connection executes the request to OpenAI. Remove the condition and select the "Webhook" connection type. Then choose the Webhook you created from the OpenAI Webhook Template.

  - The second connection displays the OpenAI response once an answer has been received. Set the condition "If custom variable openai_reply is not empty", then set the connection to "Go to" the landing Module you created to display the answer.

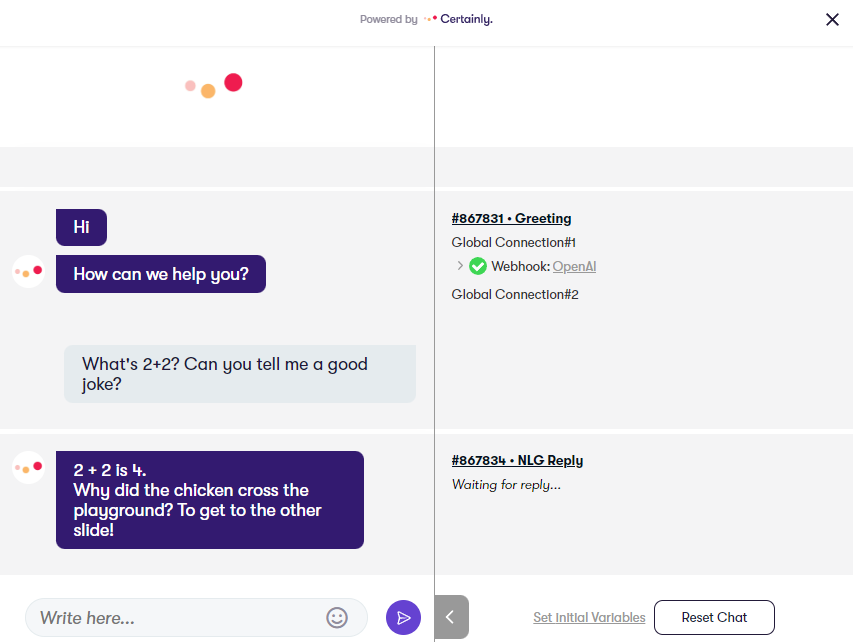

Because OpenAI is integrated as the final Global Connection, your bot prioritizes the connections that route to other conversational flows. With this setup, your chatbot relies on OpenAI only for user messages that would otherwise go to a generic Fallback.

- You can now test your chatbot! An example of the flow is as follows:
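To make the routing order concrete, here is a minimal Python sketch of the logic the setup above produces. It is not Certainly's implementation: `render`, `fake_openai_webhook`, `handle_message`, and the sample connection are hypothetical names used only for illustration, and the Webhook is replaced by a stand-in that stores a canned answer in "openai_reply".

```python
import re

# Matches placeholders written as [[variable_name]].
PLACEHOLDER = re.compile(r"\[\[(\w+)\]\]")


def render(text, variables):
    """Replace each [[name]] with the variable's value (empty string if unset)."""
    return PLACEHOLDER.sub(lambda m: str(variables.get(m.group(1), "")), text)


def fake_openai_webhook(user_message, variables):
    """Hypothetical stand-in for the Webhook: stores a canned answer."""
    variables["openai_reply"] = f"(model answer to: {user_message})"


def handle_message(user_message, variables, other_connections):
    """Evaluate connections top to bottom; the OpenAI pair comes last."""
    # Connections that route to other conversational flows are tried first.
    for matches, module_text in other_connections:
        if matches(user_message):
            return render(module_text, variables)
    # First OpenAI connection: no condition, so the Webhook always runs here.
    fake_openai_webhook(user_message, variables)
    # Second OpenAI connection: go to the landing Module if a reply was stored.
    if variables.get("openai_reply"):
        return render("[[openai_reply]]", variables)
    # Nothing matched and no reply arrived: generic Fallback.
    return "Sorry, I didn't understand that."


# One ordinary connection, followed implicitly by the OpenAI pair.
connections = [(lambda msg: "order" in msg.lower(), "Let me look up your order.")]
print(handle_message("Where is my order?", {}, connections))
print(handle_message("Tell me a joke", {}, connections))
```

The first message is caught by the ordinary connection, so the Webhook is never called; only the second message, which nothing else matches, reaches the OpenAI pair at the bottom of the list.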

Customize prompts sent to OpenAI

More advanced users can customize the prompt sent to OpenAI by editing the Webhook.

To do so, open the OpenAI Webhook instance you created from the OpenAI Webhook Template in the previous section. In the Body section of the Webhook, adjust the prompt to your liking.

Some examples are available in OpenAI's documentation.
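As a rough guide to what a customized prompt can look like, the Python sketch below builds a request for OpenAI's Chat Completions endpoint with a custom system prompt. It assumes the Webhook targets that endpoint with the "gpt-3.5-turbo" model; the Body shipped with the Webhook Template may be structured differently, and `build_request`, `extract_reply`, the prompt text, and the temperature value are illustrative choices rather than part of the Certainly Platform. Nothing is sent over the network.

```python
import json

# OpenAI's Chat Completions endpoint (assumed target of the Webhook).
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_request(api_key, user_message, system_prompt):
    """Return the headers and JSON body for a Chat Completions request."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = {
        "model": "gpt-3.5-turbo",
        "messages": [
            # The system message is where prompt customization happens.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        # Illustrative value; lower values give more predictable answers.
        "temperature": 0.2,
    }
    return headers, json.dumps(body)


def extract_reply(response_json):
    """Pull the answer text out of a parsed Chat Completions response."""
    return response_json["choices"][0]["message"]["content"].strip()


headers, body = build_request(
    api_key="sk-your-key",  # placeholder, not a real key
    user_message="Do you ship to Denmark?",
    system_prompt="You are a concise support assistant for a webshop.",
)
print(body)
```

Changing `system_prompt` is the equivalent of editing the prompt in the Webhook Body: it sets the tone and constraints for every answer, while the user's message is passed through unchanged.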