Generative AI Advanced Settings

Generative AI Advanced Settings enhance control over AI responses, allowing for fine-tuning through semantic filters, personalized variables, and additional processing to ensure replies are relevant and aligned with business goals.

This help center article will provide you with a deeper understanding of how Generative AI advanced settings work and how to implement them on our platform. The topics covered include:

- How Advanced Settings Impact Generative Responses

- Adding Dynamic Content and Custom Variables to the Guidelines

- Working with Semantic Filters

- Processing Generative Replies Before They Are Shown to Users

How Advanced Settings Impact Generative Responses

Advanced settings in Certainly's generative AI feature give you finer control over what influences the generation of replies. You can script advanced guidelines to complement the static ones, apply conditional semantic filters to the knowledge base, and process the generated reply before it is sent back to the end user.

You can find Advanced Settings below the basic Generative AI Guidelines editor.

Adding Dynamic Content and Custom Variables to the Guidelines

Certainly's generative AI also lets you include dynamic content and custom variables in your guidelines. Pulling in values such as the current locale, promotions, inventory levels, or user-specific data keeps chatbot responses up to date and personalized.

The guidelines specified using the simple UI settings are exposed through the custom variable user_prompt. You can define a separate variable that appends information to it using a Jinja template block.
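As a minimal sketch of such a template block: the example below assumes that the block can read and reassign user_prompt and that a locale variable is already set in the conversation. The helper name extra_guideline is hypothetical, and the exact variable handling on the platform may differ.

```jinja
{# Assumption: user_prompt holds the guidelines from the simple UI settings,
   and locale is populated earlier in the conversation (for example "de"). #}
{% set extra_guideline = "* ACME does have physical stores in some countries. You can find the ACME stores closest to you and their opening hours [here](https://www.ACME.com/" ~ locale ~ "/stores-find)" %}

{# Append the new guideline on its own line after the existing guidelines. #}
{% set user_prompt = user_prompt ~ "\n" ~ extra_guideline %}
```

The ~ operator converts its operands to strings and concatenates them, so the value of locale is spliced into the URL.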

Such a Jinja script appends a generic guideline, part of which is populated from the variable locale.

If locale is set to "de", the guideline injected at conversation time is:

* ACME does have physical stores in some countries. You can find the ACME stores closest to you and their opening hours [here](https://www.ACME.com/de/stores-find)

If you're unsure how to apply these settings to serve your use case, we’d love to hear from you! Reach out to us, and we'll help you achieve the best custom generative behavior for your scenarios.

Working with Semantic Filters

Semantic filters allow you to narrow the knowledge base used in generating the assistant's reply. A classic example is to ensure the generative reply is based on knowledge about a specific region, or a specific brand.

The filters can be set dynamically depending on the context of the conversation, and can also be overridden throughout the conversation.

Tagging knowledge base resources

Before you can filter for specific knowledge base content, that content needs to be tagged. You can tag content by locale, brand, and any other information relevant to your use case.

Currently, knowledge base processing and tagging are only available through EAP. To ask about access, reach out to your Technical Account Manager or use the Contact Us page.

Filter for specific language or locale

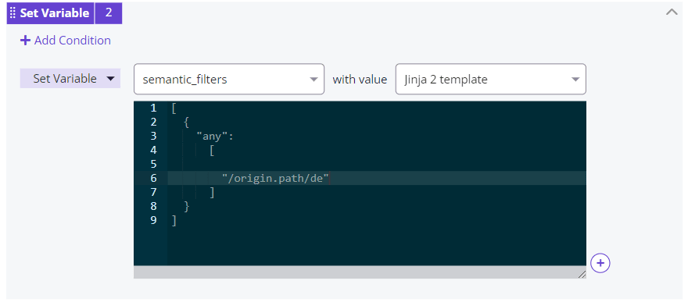

If your knowledge base document is tagged with "de" at the origin path, you can filter for it by specifying the following semantic_filters:

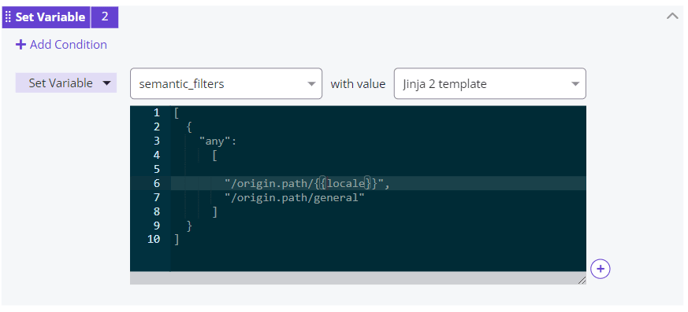
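In text form, a filter of this kind might look like the sketch below. The path format is inferred from the combined example later in this article, and wrapping a single path in an "all" block is an assumption rather than confirmed syntax.

```jinja
[
  {
    "all": [
      "/origin.path/de"
    ]
  }
]
```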

Filter for specific language locale and/or a general locale

Sometimes you might have documentation that applies across all languages or locales. In this scenario, you may want to anchor the generative replies to either the specific language locale associated with the conversation or a generic knowledge base pool that applies to all locales.
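A sketch of such a filter, reusing the "any" operator and the path pattern from the combined example later in this article. It assumes that the shared content is tagged "general" at the origin path and that the locale variable is set at conversation time.

```jinja
[
  {
    "any": [
      "/origin.path/{{locale}}",
      "/origin.path/general"
    ]
  }
]
```

With "any", a knowledge base resource qualifies if it matches at least one of the listed paths, so locale-specific and general content are both eligible.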

Filter for a specific brand

For companies with different brands, the assistant can be configured to search within a specific brand's knowledge base, ensuring that responses are relevant to the brand associated with the ongoing conversation.
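A brand filter might look like the sketch below. It assumes that resources carry a brand label under classification.labels, as in the combined example later in this article, and that my_brand is a custom variable you populate yourself.

```jinja
[
  {
    "all": [
      "/classification.labels/brand/{{my_brand}}"
    ]
  }
]
```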

Combining logic operators for advanced filtering

You can combine filters using the "any" and "all" operators. For example, you may want to filter down for (1) knowledge base of a specific brand, and (2) any knowledge base associated with either the current locale or the default locale. You can do so by specifying:

    [
      {
        "all": [
          "/classification.labels/brand/{{my_brand}}"
        ]
      },
      {
        "any": [
          "/origin.path/{{locale}}",
          "/origin.path/general"
        ]
      }
    ]

Make sure that your configuration populates variables such as {{my_brand}} and {{locale}} with appropriate values at conversation time.

Processing Generative Replies Before They Are Shown to Users

Before generative replies are presented to users, they can be processed further to meet specific requirements. This additional processing ensures the final output is polished and suitable for user interaction.

Replacing words in generated replies

Sometimes, certain words or phrases are undesirable in a response, for example when your brand guidelines require specific wording for certain terms.

You can replace such words with a Jinja scripting block that is executed after the reply is generated.
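A minimal sketch of such a block, using Jinja's built-in replace filter. The variable name generated_reply is hypothetical; this article does not name the variable that holds the generated text, so check your configuration for the actual one. The word pairs are illustrative only.

```jinja
{# Assumption: generated_reply is a hypothetical name for the variable
   that holds the text produced by the generative AI. #}
{% set generated_reply = generated_reply
     | replace("customer", "guest")
     | replace("ACME Store", "ACME Boutique") %}
```

Note that replace is case-sensitive and matches substrings, so "customers" becomes "guests" while "Customer" is left unchanged. Choose replacement pairs with that in mind.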

Setting Variables Based on the Topic of the Reply

Responses can be customized further by setting variables that adjust the content based on the topic. This can include personalizing responses with user names, addressing specific products or services, or modifying the tone.

Example: In this conversation, the customer needs to update their password. The Generative AI understands the request and captures it in a variable.
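One simple way to approximate this in a post-processing block is a keyword check on the reply. This is a swapped-in illustration, not necessarily how the platform detects topics. Both generated_reply and customer_request are hypothetical variable names.

```jinja
{# Assumptions: generated_reply holds the generated text, and
   customer_request is a hypothetical variable read later in the flow. #}
{% if "password" in (generated_reply | lower) %}
  {# Record the detected topic so later steps can act on it. #}
  {% set customer_request = "update_password" %}
{% endif %}
```

Lowercasing the reply first makes the check match "Password" and "password" alike.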

By leveraging these advanced settings and processing techniques, Certainly's generative AI can deliver more precise, relevant, and user-friendly interactions, enhancing the overall user experience and achieving better alignment with business goals.